Summary: There does seem to be something wrong with scientific reporting, but whose fault is it? Gary Gutting wrote about it, and now I'll write about him writing about it.

Gary Gutting on what science (and scientific reporting) is doing, and why it seems to go so wrong so often

BLOT: (26 Apr 2013 - 03:36:38 PM)

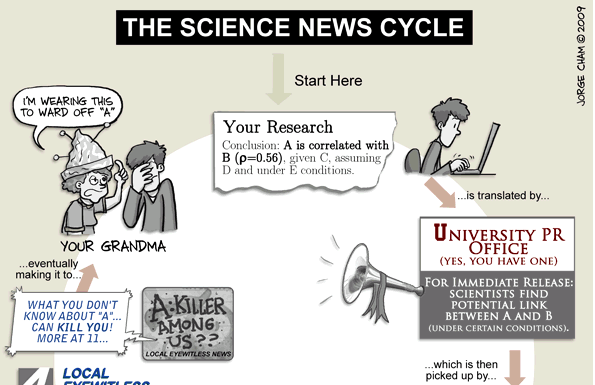

All you really need to know about scientific reporting is generally summed up with the PhD comic titled "The Science News Cycle", excerpted below:

Results intended to study very specific elements generally lose specificity at each level of reporting: conditions and margins of error drop off early, and specific elements are transformed into general ones later in the cycle. A study that says red hair gives you super powers 5% of the time if you are exposed to more than twelve space units of astroradiation has about a 50/50 chance of becoming "Why Red Hair is the Best!" on CNN or "Pastor Slams Pro-Radiation Report" on FoxNews. Probably both.

There are a number of reasons for this, from a need for spectacle to a human desire for instant gratification (how does this result profit me? HOW CAN I LIVE LONGER, RIGHT NOW!?), but they can best be summed up as a mixture of Hanlon's Razor and scientific ignorance. When someone understands something only broadly [if that], they can have a hard time grasping the specifics. When people fail to understand the specifics, and how those specifics relate to the whole, they fall back on a mixture of "common sense" and interpretations that fit their world-view. If I say that X amount of Y has been shown to trigger a Z increase in muscle response time, it is probably only a matter of time before someone suggests taking nX to get +nZ speed, even though nothing like that was concluded.
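To make the nX/nZ point concrete, here is a small Python sketch. Everything in it is hypothetical: the `response` function, its saturating (Hill-type) curve, and its numbers are invented for illustration and are not drawn from any real study. It simply compares the naive "n times the dose, n times the effect" reading against one plausible curve that levels off.

```python
# Hypothetical saturating dose-response curve. The shape and parameters
# are invented for illustration only; no real study is being modelled.
def response(dose, max_effect=10.0, half_dose=5.0, hill=2.0):
    """Effect size for a given dose; it levels off as the dose grows."""
    return max_effect * dose**hill / (half_dose**hill + dose**hill)


baseline = response(5.0)  # the "X amount of Y" that was actually studied

for n in (1, 2, 4, 8):
    naive = n * baseline          # what the "nX gives +nZ" reading assumes
    modeled = response(n * 5.0)   # what this made-up curve actually gives
    print(f"{n}x dose: naive {naive:.1f}, modeled {modeled:.1f}")
```

Under this made-up curve, doubling the dose gives an effect of 8.0 rather than 10.0, and even eight times the dose never reaches 10. A real curve could just as easily flatten, reverse, or turn toxic; the study that measured X only tells you about X.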

Gary Gutting talks about such issues in a recent piece:

As Nancy Cartwright, a prominent philosopher of science, has recently emphasized, the very best randomized controlled test in itself establishes only that a cause has a certain effect in a particular kind of situation. For example, a feather and a lead ball dropped from the same height will reach the ground at the same time - but only if there is no air resistance. Typically, scientific laws allow us to predict a specific behavior only under certain conditions. If those conditions don't hold, the law doesn't tell us what will happen.

False. Scientific laws do predict things in a perfect world, but they also provide a model for predicting things in an "imperfect" (i.e., real) one. The law of gravity tells us that gravity gives the feather and the ball the same acceleration. Air resistance, distance to the ground, imparted velocity, and many other factors will affect how long it takes those items to actually strike the ground, but that is not to say that the law of gravity depends on air resistance. I am fairly sure that's not what Gutting means, but that is how he said it [he muddles the language of law, theory, and model in other places too; this is just his most egregious lapse in logic]. You can read Cartwright's quote in its original context:

Recall the logic of RCTs [randomised controlled trials]. The circumstances there are ideal for ensuring "the treatment caused the outcome in some members of the study" - ie, they are ideal for supporting "it-works-somewhere" claims. But they are in no way ideal for other purposes; in particular they provide no better base for extrapolating or generalising than knowledge that the treatment caused the outcome in any other individuals in any other circumstances.

Of course, in real-world science, the best studies work hard to replicate results across many tests, with differing controls, factors, and conditions, to try to weed out the many factors that throw up false positives. A mixture of biological complexity and ethical considerations makes weeding out these many, many factors difficult in biology (especially human biology, which we have historically wanted to place above the crude analysis of natural philosophy), but to a degree we are getting there, one foot in front of the other. Is there ever going to be some perfect understanding of the human body that will give incontrovertible results? Probably not. Each organism is a complex system that is the sum of its parts and then some. The complexity explodes to perhaps an irrational state, if what you mean by "rational" is "summable in concise, always perfectly precise and accurate rules" [hence statistical measures like the LD50]. Will we get to a point where we can be 99% sure of how to address something? Yes. Probably. And then we'll probably lose it and then gain it back. On a long enough timeline, great temples to science will be built up, cast down, and built up again.

Whether popular press reporters will ever want to cover that accurately is a tricky question. They certainly could, but the results will often be less certain than I think the press is willing to report. The word "may" is the watchword of many a scientific write-up in a local newspaper, though rarely does the text feel like it honestly conveys the proper sense of possibility.

On Matters of Popularizing Science

dickens of a blog

Written by Doug Bolden

For those wishing to get in touch, you can contact me in a number of ways

This work is licensed under a Creative Commons Attribution-ShareAlike 3.0 Unported License.

The longer, fuller version of this text can be found on my FAQ: "Can I Use Something I Found on the Site?".

"The hidden is greater than the seen."